Brief Overview of the Importance of Testing in the Context of ML/AI

In the rapidly evolving world of technology, Machine Learning (ML) and Artificial Intelligence (AI) have emerged as transformative forces driving innovation across various industries. However, as these technologies become integral to critical applications, ensuring their reliability, accuracy, and fairness becomes paramount. This is where rigorous testing of ML/AI products comes into play.

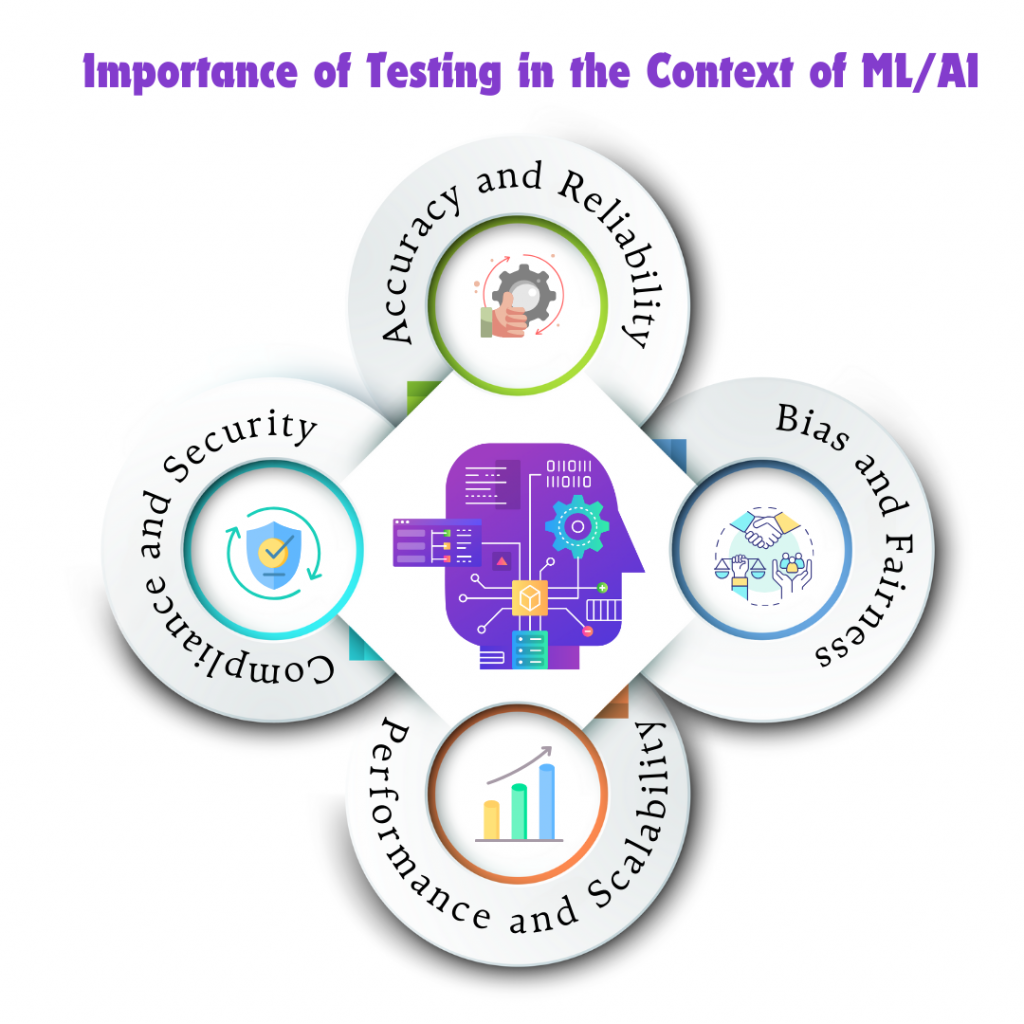

Testing in the context of ML/AI is essential for several reasons:

- Accuracy and Reliability: ML models are built on vast amounts of data and complex algorithms. Ensuring that these models make accurate predictions and perform reliably across different scenarios is crucial. Testing helps identify and rectify errors that could otherwise lead to incorrect outcomes, which can be particularly detrimental in sensitive applications like healthcare, finance, and autonomous driving.

- Bias and Fairness: ML models can inadvertently learn and perpetuate biases present in the training data. Without thorough testing, these biases can go unnoticed, leading to unfair and discriminatory outcomes. Testing for bias and fairness ensures that ML/AI products treat all users equitably and uphold ethical standards.

- Performance and Scalability: As ML/AI applications scale, maintaining performance under varying loads and conditions is vital. Testing helps assess how well a model performs under stress and ensures it can handle real-world usage without degradation.

- Compliance and Security: Regulatory compliance and security are critical in many industries. Testing ensures that ML/AI models adhere to relevant regulations and are secure against potential vulnerabilities and attacks.

Unique Challenges and Requirements of Testing ML/AI Products Compared to Traditional Software

Testing ML/AI products presents unique challenges that differ significantly from traditional software testing. These challenges stem from the inherent complexity and dynamic nature of ML/AI systems:

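- Dynamic and Non-deterministic Behavior: In traditional software, a given input produces a predictable output that can be written down in advance. ML models are harder to pin down. Retraining the same model can give different results because of random weight initialization, data shuffling, and hardware-level nondeterminism, and some models sample from a probability distribution at inference time. Even when a trained model is deterministic, there is usually no precise specification of the correct output for every input. Together, these properties make it challenging to define expected results and create test cases.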

- Data Quality and Variability: The performance of ML models depends heavily on the quality and representativeness of the training data. Testing must check that the data is clean and accurate and must examine it for biases. Additionally, models need to be tested against diverse datasets to ensure they generalize well across different conditions.

- Evolving Models: ML models are often retrained and updated with new data to improve performance or adapt to changing environments. Continuous testing is required to validate these updates and ensure that changes do not introduce new errors or degrade existing functionality.

- Interpretability and Explainability: Understanding why an ML model makes a certain prediction can be difficult due to the complexity of the underlying algorithms. Testing needs to include checks for interpretability and explainability to ensure that the model’s decision-making process is transparent and justifiable.

- Bias Detection and Mitigation: Identifying and mitigating bias in ML models is a complex task. Testing must incorporate techniques to detect biases in training data and model predictions, ensuring that the model performs fairly across different user groups.

- Integration with Traditional Systems: ML/AI components are often integrated into larger systems that include traditional software. Testing must verify the seamless integration of these components and ensure that the overall system functions correctly and efficiently.
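Because exact outputs are hard to pin down, one common approach is to fix random seeds so that a single run is reproducible, and to assert that a metric stays within a tolerance band across several seeds rather than comparing exact values. The Python sketch below assumes a hypothetical train_and_score function that stands in for a real training run; its noise is simulated, and the thresholds are illustrative.

```python
import random
import statistics


def train_and_score(seed: int) -> float:
    """Hypothetical stand-in for a full training run that returns validation accuracy.

    Real training varies with the seed (weight initialization, data shuffling);
    here that variation is simulated as small seed-dependent noise.
    """
    rng = random.Random(seed)
    return 0.90 + rng.uniform(-0.01, 0.01)


def check_stability(seeds, min_mean=0.88, max_stdev=0.02):
    """Judge the distribution of a metric across seeds instead of one exact value."""
    scores = [train_and_score(s) for s in seeds]
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)
    return mean >= min_mean and stdev <= max_stdev, mean, stdev


# Fixing the seed makes a single run reproducible.
assert train_and_score(42) == train_and_score(42)

# The test itself tolerates variation across seeds.
ok, mean, stdev = check_stability(seeds=range(5))
print(f"stable={ok} mean={mean:.3f} stdev={stdev:.4f}")
```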

Testing ML/AI products requires a comprehensive approach that goes beyond traditional software testing methodologies. It demands a deep understanding of data quality, model behavior, and ethical considerations to ensure that ML/AI systems are accurate, fair, and reliable. By addressing these unique challenges, we can build trust in ML/AI technologies and unlock their full potential to drive positive change.

Importance of QA/Testing in ML/AI

Ensuring Accuracy and Reliability of ML Models

In the realm of Machine Learning (ML) and Artificial Intelligence (AI), accuracy and reliability are paramount. Unlike traditional software, where predefined rules dictate outcomes, ML models learn their behavior from data and are often retrained as new data arrives. This dynamic nature necessitates rigorous testing to ensure these models produce accurate and reliable predictions.

Testing plays a critical role in validating that the model’s predictions align with real-world outcomes. It involves assessing the model’s performance on various datasets, including unseen data, to verify its generalizability. By identifying and rectifying errors, testing helps prevent the deployment of models that could make incorrect predictions, which is especially crucial in high-stakes applications such as healthcare diagnostics, financial forecasting, and autonomous driving.

Importance of Testing for Bias, Fairness, and Ethical Considerations

One of the significant challenges in ML/AI is the potential for bias in models. Bias can arise from the training data, which may reflect historical prejudices or imbalances, leading to unfair and discriminatory outcomes. Testing for bias is essential to ensure that ML models do not perpetuate or amplify these biases.

Fairness testing involves evaluating the model’s performance across different demographic groups to ensure equitable treatment. For instance, a healthcare AI system should provide accurate diagnoses regardless of a patient’s race or gender. Ethical considerations also come into play, as ML/AI systems must operate transparently and justifiably. Ensuring that the model’s decision-making process can be explained and understood by stakeholders is vital for building trust and accountability.

Maintaining Performance and Scalability

As ML/AI applications grow and evolve, maintaining their performance and scalability becomes a critical aspect of QA/testing. Performance testing assesses the model’s efficiency in handling large volumes of data and making predictions within acceptable timeframes. This is particularly important for real-time applications, where latency can significantly impact user experience and decision-making.

Scalability testing ensures that the model can handle increasing amounts of data and a growing number of users without degradation in performance. This involves stress testing the system under various conditions to identify potential bottlenecks and optimize resource utilization. Continuous monitoring and testing are necessary to adapt to changing environments and data distributions, ensuring that the ML/AI system remains robust and efficient over time.

Understanding ML/AI Testing

Types of ML/AI Testing

Data Testing: Ensuring Data Quality and Integrity

Data is the lifeblood of ML/AI systems. The quality and integrity of the data used to train models directly impact their performance. Data testing involves verifying that the input data is accurate, complete, and free from anomalies. This process includes validating data formats, checking for missing or corrupted data, and ensuring that the data is representative of the real-world scenarios the model will encounter. By rigorously testing data, we can prevent garbage-in, garbage-out scenarios where poor-quality data leads to unreliable model outputs.
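A basic data test can be expressed as a schema of expected types and value ranges, checked row by row. The Python sketch below uses a hypothetical three-column schema and made-up records; real pipelines would load the schema from configuration or infer it from a trusted reference dataset.

```python
from typing import Any

# Hypothetical schema for illustration: column -> (expected type, minimum, maximum).
# None means "no bound".
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, None),
    "country": (str, None, None),
}


def validate_records(records: list[dict[str, Any]]) -> list[str]:
    """Return a list of problems: missing fields, wrong types and out-of-range values."""
    problems = []
    for i, row in enumerate(records):
        for col, (expected_type, lo, hi) in SCHEMA.items():
            value = row.get(col)
            if value is None:
                problems.append(f"row {i}: '{col}' is missing")
            elif not isinstance(value, expected_type):
                problems.append(
                    f"row {i}: '{col}' should be {expected_type.__name__}, "
                    f"got {type(value).__name__}"
                )
            elif lo is not None and value < lo:
                problems.append(f"row {i}: '{col}'={value} is below {lo}")
            elif hi is not None and value > hi:
                problems.append(f"row {i}: '{col}'={value} is above {hi}")
    return problems


records = [
    {"age": 34, "income": 52000.0, "country": "DE"},
    {"age": -3, "income": 41000.0, "country": "FR"},  # impossible age
    {"age": 29, "country": "US"},                     # income missing
]
for problem in validate_records(records):
    print(problem)
```

On this sample, the sketch reports two problems: the negative age in row 1 and the missing income in row 2.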

Model Testing: Evaluating the Performance and Accuracy of the Model

Model testing is crucial for assessing how well an ML model performs its intended tasks. This involves splitting data into training and test sets and evaluating the model’s accuracy, precision, recall, and other performance metrics on the held-out data. Cross-validation helps estimate how well the model generalizes, while A/B testing in production compares a new model against the current one on live traffic. Model testing ensures that the model not only fits the training data well but also performs accurately on new, unseen data, thus avoiding issues like overfitting or underfitting.
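A minimal holdout evaluation shuffles the data, keeps a test set aside, fits on the rest, and compares training and test accuracy. Everything in this Python sketch is an assumption for illustration: the synthetic data (a single feature with 10% label noise) and the toy threshold classifier stand in for a real dataset and model.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and hold out the last `test_fraction` for testing."""
    rows = rows[:]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def accuracy(rows, threshold):
    """Share of rows where the rule `x >= threshold` matches the label."""
    return sum((x >= threshold) == (y == 1) for x, y in rows) / len(rows)


def fit_threshold(train):
    """Toy 1-D classifier: choose the threshold that maximizes training accuracy."""
    return max((x for x, _ in train), key=lambda t: accuracy(train, t))


# Synthetic data: label is 1 when x > 0.5, with 10% of labels flipped as noise.
rng = random.Random(1)
data = []
for _ in range(500):
    x = rng.random()
    y = int(x > 0.5)
    if rng.random() < 0.1:
        y = 1 - y
    data.append((x, y))

train, test = train_test_split(data)
t = fit_threshold(train)
train_acc, test_acc = accuracy(train, t), accuracy(test, t)
print(f"threshold={t:.2f} train={train_acc:.2f} test={test_acc:.2f} gap={train_acc - test_acc:.2f}")
```

A test accuracy far below training accuracy suggests overfitting, while low accuracy on both suggests underfitting; how large a gap is acceptable depends on the application.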

Integration Testing: Verifying the Integration of ML Components with the Rest of the System

Integration testing focuses on ensuring that the ML components work seamlessly with the broader system. This involves verifying that data flows correctly from input sources to the model and that the model’s outputs are properly utilized by downstream systems. Integration testing helps identify issues that arise when different software components interact, ensuring that the entire system operates cohesively and efficiently.
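An integration test exercises the stages together and checks the contract between them, rather than testing each stage in isolation. The three stages in this Python sketch (preprocess, model_predict, postprocess) are hypothetical stand-ins for real modules or services; the test asserts only what downstream code relies on: the expected keys, a probability in [0, 1], and a known action value.

```python
import math


# Hypothetical pipeline stages; in a real system these would be separate modules or services.
def preprocess(raw: dict) -> list[float]:
    """Turn a raw request into the feature vector the model expects."""
    return [float(raw["amount"]) / 1000.0, 1.0 if raw["is_foreign"] else 0.0]


def model_predict(features: list[float]) -> float:
    """Toy logistic model returning a fraud probability."""
    z = 2.0 * features[0] + 1.5 * features[1] - 3.0
    return 1.0 / (1.0 + math.exp(-z))


def postprocess(prob: float, threshold: float = 0.5) -> dict:
    """Shape the output that the downstream system consumes."""
    return {"fraud_probability": round(prob, 4), "action": "review" if prob >= threshold else "approve"}


def handle_request(raw: dict) -> dict:
    return postprocess(model_predict(preprocess(raw)))


def test_end_to_end_contract():
    """Integration check: the combined output honours the downstream contract."""
    out = handle_request({"amount": 2500, "is_foreign": True})
    assert set(out) == {"fraud_probability", "action"}
    assert 0.0 <= out["fraud_probability"] <= 1.0
    assert out["action"] in {"review", "approve"}


test_end_to_end_contract()
print(handle_request({"amount": 2500, "is_foreign": True}))
```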

Performance Testing: Assessing the Efficiency and Scalability of the ML Model

Performance testing evaluates the efficiency and scalability of ML models. It examines how quickly a model processes data and generates predictions, as well as how it performs under varying loads. This type of testing is critical for applications requiring real-time or near-real-time responses, such as fraud detection or autonomous driving. Scalability testing ensures that the model can handle increasing amounts of data and user requests without performance degradation, enabling the system to grow and adapt to rising demands.
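A basic latency check times repeated calls to the model and reports percentiles, since tail latency (p95, p99) often matters more than the average for real-time systems. The Python sketch below assumes a hypothetical predict function and an illustrative 50 ms budget. In practice you would call the real inference code or serving endpoint; this single-threaded loop does not measure behavior under concurrent load, which is what dedicated load-testing tools are for.

```python
import statistics
import time

BUDGET_MS = 50.0  # illustrative latency budget, not a recommendation


def predict(x: list[float]) -> float:
    """Hypothetical model call; replace with the real inference function or client."""
    return sum(v * 0.5 for v in x)


def measure_latency_ms(fn, payload, runs=200, warmup=20):
    """Call fn repeatedly and return latency percentiles in milliseconds."""
    for _ in range(warmup):  # let caches and lazy initialization settle
        fn(payload)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(payload)
        samples.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples, n=100)  # 99 cut points: cuts[49] is the median
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


latency = measure_latency_ms(predict, [1.0] * 100)
within_budget = latency["p99"] <= BUDGET_MS
print(latency, "within budget:", within_budget)
```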

Challenges in ML/AI Testing

Dynamic Nature of ML Models

One of the primary challenges in ML/AI testing is the dynamic nature of ML models. In traditional software, behavior changes only when the code changes, and those changes are explicitly controlled. An ML model’s behavior, by contrast, can change whenever it is retrained on new data, even if no code has changed, and some systems retrain continuously. This makes outcomes difficult to predict and necessitates ongoing testing to ensure that model updates do not introduce new errors or degrade performance.

Handling Large Datasets

ML/AI systems often require large datasets to train and validate models effectively. Handling these massive datasets poses logistical challenges in terms of storage, processing power, and testing efficiency. Ensuring that tests run efficiently on large datasets without compromising thoroughness requires sophisticated data management and testing strategies.

Ensuring Unbiased and Fair Models

Bias in ML models can lead to unfair and discriminatory outcomes, which is a significant concern for ethical AI deployment. Ensuring unbiased and fair models involves testing for and mitigating biases in training data and model predictions. This requires comprehensive testing strategies that evaluate model performance across diverse demographic groups and scenarios, ensuring equitable treatment for all users.

Monitoring and Maintaining Model Performance Over Time

Once deployed, ML models must be continuously monitored and maintained to ensure sustained performance. Data distributions and real-world conditions can change, affecting model accuracy and reliability. Ongoing monitoring helps detect performance drifts and anomalies, prompting timely interventions such as model retraining or updates. Effective monitoring and maintenance are critical for long-term success and reliability of ML/AI systems.
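One widely used way to quantify drift in a single feature is the Population Stability Index (PSI). It compares the proportion of values in each bin for a reference sample (e_i) and a current sample (a_i): PSI is the sum over bins of (a_i - e_i) * ln(a_i / e_i). The Python sketch below uses equal-width bins over the reference range and synthetic Gaussian data. A commonly quoted rule of thumb treats PSI below 0.1 as little change and above 0.25 as a significant shift, but those cut-offs are heuristics rather than standards.

```python
import math
import random


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a current sample.

    Bin edges come from the reference range; eps avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


rng = random.Random(0)
reference = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]       # no drift
shifted = [rng.gauss(0.8, 1) for _ in range(5000)]  # mean has drifted
print(f"PSI same={psi(reference, same):.3f} shifted={psi(reference, shifted):.3f}")
```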

Understanding the various types of ML/AI testing and the unique challenges they present is essential for developing robust, reliable, and ethical ML/AI products. By addressing these aspects comprehensively, we can ensure that ML/AI technologies deliver accurate, fair, and scalable solutions that meet the highest standards of quality and integrity.

Manual Testing of ML/AI Products

Role of Manual Testing

Verifying the Correctness of Data Preprocessing Steps

Manual testing plays a crucial role in ensuring the correctness of data preprocessing steps. Data preprocessing involves cleaning, transforming, and preparing raw data for use in ML models. This step is vital because any errors in preprocessing can propagate through the entire model, leading to inaccurate predictions. Manual testing helps verify that data transformations, such as normalization, encoding, and feature extraction, are performed correctly and that the data fed into the model is of high quality and suitable for training.
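When reviewing preprocessing by hand, small spot checks on a sample make errors easy to see. The Python sketch below assumes z-score standardization and one-hot encoding as the transformations under review (both illustrative choices) and checks properties any correct implementation must have: standardized values have mean 0 and standard deviation 1, and every one-hot row has exactly one active category.

```python
import statistics


def standardize(values):
    """Z-score normalization: subtract the mean, divide by the population standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]


def one_hot(labels, categories):
    """Encode each label as a 0/1 vector over a fixed category list."""
    return [[1 if label == c else 0 for c in categories] for label in labels]


# Spot checks a tester can run on a small sample and inspect by eye.
raw = [12.0, 15.0, 9.0, 30.0, 14.0]
z = standardize(raw)
print([round(v, 2) for v in z])
assert abs(statistics.fmean(z)) < 1e-9         # centred
assert abs(statistics.pstdev(z) - 1.0) < 1e-9  # unit variance

encoded = one_hot(["red", "blue", "red"], categories=["blue", "green", "red"])
print(encoded)
assert all(sum(row) == 1 for row in encoded)   # exactly one active category per row
```

Note that this one_hot function maps an unseen label to an all-zero row; the last assertion is what catches that case, which is exactly the kind of silent preprocessing error worth looking for.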

Performing Exploratory Testing on the Model’s Predictions

Exploratory testing involves manually probing the model’s predictions to uncover any unexpected behaviors or errors. By testing various inputs, testers can gain insights into how the model responds to different scenarios. This hands-on approach allows testers to identify edge cases and potential weaknesses that automated tests might miss. Exploratory testing is particularly useful for understanding the model’s behavior in real-world situations and ensuring it performs as expected across a wide range of inputs.
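A simple aid for exploratory testing is to hold an input fixed, vary one feature at a time, and note where the prediction changes. The model in this Python sketch is a hypothetical loan-approval rule invented for illustration; the probe function works with any callable that takes a feature dictionary.

```python
def model(features: dict) -> int:
    """Hypothetical loan-approval model: returns 1 (approve) or 0 (decline)."""
    score = (
        0.004 * features["income_k"]
        + 0.3 * features["years_employed"]
        - 0.5 * features["defaults"]
    )
    return int(score >= 1.0)


def probe(model, base: dict, field: str, deltas) -> tuple[int, list]:
    """Vary one field around a base input; return the baseline prediction and the deltas that flip it."""
    baseline = model(base)
    flips = []
    for d in deltas:
        variant = {**base, field: base[field] + d}
        if model(variant) != baseline:
            flips.append(d)
    return baseline, flips


applicant = {"income_k": 50, "years_employed": 2, "defaults": 0}
print(probe(model, applicant, "years_employed", [-1, 1, 2]))
print(probe(model, applicant, "income_k", [25, 75]))
```

Here a tester would see that one extra year of employment flips the decision, while income has to rise by 75k to do the same, and could then ask the data scientists whether that trade-off is intended.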

Validating the Model’s Behavior with Edge Cases and Uncommon Scenarios

Edge cases and uncommon scenarios can significantly impact the performance of ML models. Manual testing involves validating the model’s behavior under these conditions to ensure robustness. Testers create and run scenarios that push the model to its limits, such as inputs that are at the extreme ends of the data distribution or that contain unusual combinations of features. By validating the model against these challenging cases, testers can identify and address potential vulnerabilities, ensuring the model remains reliable in diverse situations.
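Edge cases can be collected into a table of named inputs and run through the model together, recording whether each is handled, rejected, or produces an invalid output. The predict_proba function in this Python sketch is a hypothetical stand-in with a few input guards; the harness is the part meant to carry over.

```python
import math


def predict_proba(features: list[float]) -> float:
    """Hypothetical model: logistic function of the feature sum, with basic input guards."""
    if not features:
        raise ValueError("empty feature vector")
    if any(math.isnan(v) for v in features):
        raise ValueError("NaN in input")
    z = max(min(sum(features), 500.0), -500.0)  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-z))


EDGE_CASES = {
    "all zeros": [0.0, 0.0, 0.0],
    "single feature": [0.3],
    "very large": [1e308, 1e308],
    "very negative": [-1e308, -1e308],
    "opposite infinities": [math.inf, -math.inf],
    "NaN": [math.nan, 1.0],
    "empty": [],
}


def run_edge_cases(cases):
    """Run every case and classify the result as ok, rejected, or invalid output."""
    results = {}
    for name, x in cases.items():
        try:
            p = predict_proba(x)
            results[name] = "ok" if 0.0 <= p <= 1.0 else f"invalid output: {p}"
        except ValueError as exc:
            results[name] = f"rejected: {exc}"
    return results


for name, outcome in run_edge_cases(EDGE_CASES).items():
    print(f"{name:<20} {outcome}")
```

Running this sketch reports the "opposite infinities" case as an invalid output (nan): adding +inf and -inf yields NaN, which the input guard does not catch. Surfacing gaps like this is the purpose of an edge-case battery.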

Best Practices

Collaborating Closely with Data Scientists and ML Engineers

Effective manual testing requires close collaboration with data scientists and ML engineers. This collaboration ensures that testers have a deep understanding of the model’s design, assumptions, and intended use cases. By working together, testers can develop more effective test cases and gain insights into potential areas of concern. Regular communication and feedback loops between testers and ML practitioners help ensure that testing efforts align with the overall goals and requirements of the ML project.

Creating Comprehensive Test Cases Covering Various Aspects of the ML Lifecycle

To ensure thorough testing, it’s essential to create comprehensive test cases that cover various aspects of the ML lifecycle. This includes testing data preprocessing steps, model training and validation, and model deployment. Test cases should address different stages of the ML pipeline, from data ingestion to final predictions, and cover a range of scenarios, including normal operating conditions, edge cases, and failure modes. Comprehensive test cases help ensure that all critical aspects of the ML product are thoroughly evaluated.

Regularly Updating Test Cases as Models and Data Evolve

ML models and data evolve over time, necessitating regular updates to test cases. As new data becomes available and models are retrained or fine-tuned, test cases must be revised to reflect these changes. Regularly updating test cases ensures that testing remains relevant and effective, helping to catch new issues that may arise with updated models or data. Continuous testing and maintenance of test cases are essential for sustaining the quality and reliability of ML products over their lifecycle.

Manual testing is a vital component of the QA process for ML/AI products. By verifying data preprocessing steps, performing exploratory testing, and validating edge cases, manual testers play a crucial role in ensuring the accuracy, reliability, and robustness of ML models. Adopting best practices such as close collaboration with ML practitioners, creating comprehensive test cases, and regularly updating these cases helps maintain the quality and integrity of ML/AI systems.

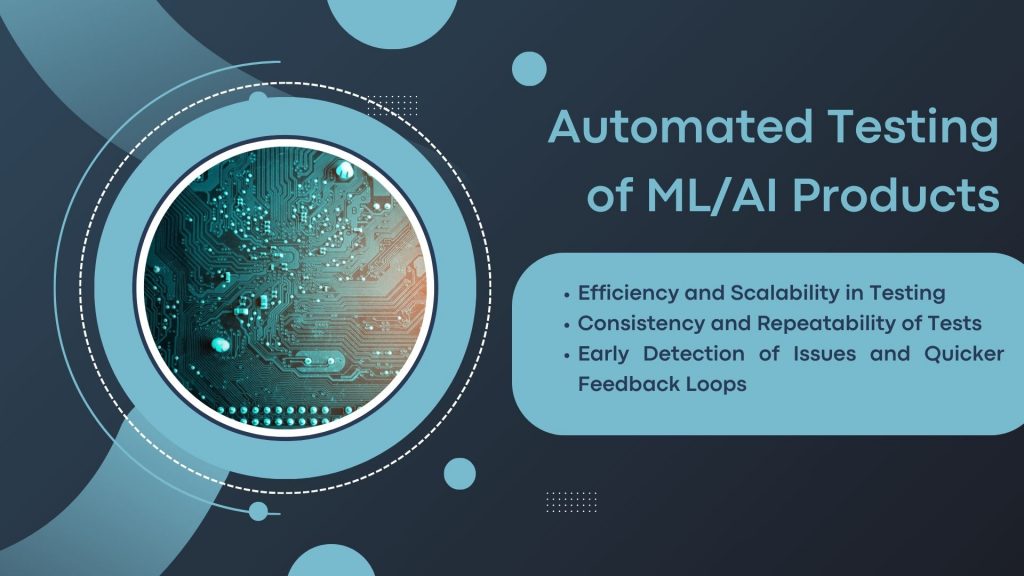

Automated Testing of ML/AI Products

Importance of Automation in ML/AI Testing

Efficiency and Scalability in Testing

Automation brings efficiency and scalability to ML/AI testing. Manual testing can be labor-intensive and time-consuming, especially when dealing with large datasets and complex models. Automated testing tools can handle vast amounts of data and execute numerous test cases simultaneously, significantly reducing the time required for testing. This efficiency allows for more frequent testing cycles, ensuring that models are continually validated and refined as new data is introduced.

Consistency and Repeatability of Tests

One of the key advantages of automated testing is the consistency and repeatability it offers. Automated tests perform the same steps in the same order every time they are run, eliminating the variability and human error that can occur with manual testing. This consistency ensures that results are reliable and reproducible, which is crucial for validating the performance and reliability of ML models. By maintaining a standard testing process, automated tests help ensure that the model’s performance is consistently evaluated against predefined criteria.

Early Detection of Issues and Quicker Feedback Loops

Automated testing enables early detection of issues and provides quicker feedback loops. Automated tests can be integrated into the development pipeline, running continuously or at regular intervals. This continuous testing approach allows for the immediate identification of errors, performance degradations, or unexpected behaviors as soon as they occur. Early detection and rapid feedback enable developers and data scientists to address issues promptly, reducing the risk of deploying flawed models and improving the overall quality of ML/AI products.

Key Tools and Frameworks

Data Validation Tools

- TensorFlow Data Validation (TFDV): TFDV is a powerful tool for validating and analyzing data used in ML models. It helps identify anomalies, missing values, and inconsistencies in the data, ensuring that the data pipeline produces high-quality inputs for model training.

- Great Expectations: Great Expectations is an open-source tool that helps automate and document data testing. It allows users to define, execute, and validate data expectations, ensuring that the data meets specified quality standards before being used for training or inference.

Model Validation Tools

- TensorFlow Model Analysis (TFMA): TFMA is a library for evaluating the performance of TensorFlow models. It enables detailed analysis of model metrics, such as accuracy, precision, recall, and fairness across different slices of data, helping to ensure that models perform well and are unbiased.

- PyTorch testing utilities: PyTorch does not ship a dedicated model-validation framework, but it provides utilities such as torch.testing.assert_close for comparing tensors within numerical tolerances. Combined with a general-purpose test runner such as pytest, these utilities help automate checks that PyTorch models meet the desired accuracy and robustness criteria.

Automation Frameworks

- Selenium: Selenium is a widely used framework for automating web browsers for testing purposes. It can be used to test ML applications that have web-based interfaces, ensuring that the front-end and back-end components work seamlessly together.

- Robot Framework: Robot Framework is an open-source automation framework that supports keyword-driven testing. It is extensible and can be integrated with various testing libraries, making it suitable for automating different aspects of ML/AI testing.

- Apache JMeter: Apache JMeter is a load-testing tool that can be used to assess the scalability and efficiency of model-serving endpoints, such as an HTTP prediction API. It simulates large numbers of concurrent requests and measures response times and throughput under different load conditions.

Developing Automated Test Scripts

Writing Scripts to Validate Data Quality

Automated test scripts for data quality validation are essential for ensuring that the data used in ML models is accurate and reliable. These scripts can check for data completeness, consistency, and conformity to expected formats. By automating these checks, data quality issues can be detected early, preventing them from affecting model performance.
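Data-quality checks are often written as ordinary test functions so that a standard runner can execute them on every new batch. The sketch below follows pytest conventions (functions named test_*, plain assert statements); load_batch and the column names are hypothetical placeholders for a real data source.

```python
# test_data_quality.py: run with `pytest test_data_quality.py`

ALLOWED_LABELS = {"spam", "ham"}


def load_batch():
    """Hypothetical loader; in practice this would read the latest training batch."""
    return [
        {"id": 1, "label": "spam", "text": "win a prize"},
        {"id": 2, "label": "ham", "text": "see you at 5"},
        {"id": 3, "label": "ham", "text": "lunch?"},
    ]


def test_ids_are_unique():
    ids = [r["id"] for r in load_batch()]
    assert len(ids) == len(set(ids)), "duplicate ids found"


def test_labels_are_known():
    bad = [r for r in load_batch() if r.get("label") not in ALLOWED_LABELS]
    assert not bad, f"unexpected labels: {bad}"


def test_text_is_not_blank():
    blank = [r["id"] for r in load_batch() if not str(r.get("text", "")).strip()]
    assert not blank, f"blank text in rows {blank}"
```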

Automating Model Evaluation and Performance Checks

Automated model evaluation scripts help streamline the process of assessing model performance. These scripts can run predefined tests to evaluate metrics such as accuracy, precision, recall, F1 score, and more. By automating performance checks, organizations can ensure that models meet the required standards before deployment, reducing the risk of errors in production environments.
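A minimal automated quality gate compares computed metrics with minimum thresholds and reports every failure, not just the first. In this Python sketch, the threshold values and the candidate metrics are illustrative numbers, not recommendations.

```python
def evaluate_gate(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Compare computed metrics with minimum thresholds; return a list of failures."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not reported")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < required {minimum:.3f}")
    return failures


THRESHOLDS = {"accuracy": 0.85, "precision": 0.80, "recall": 0.75, "f1": 0.78}
candidate = {"accuracy": 0.91, "precision": 0.88, "recall": 0.71, "f1": 0.786}  # illustrative
failures = evaluate_gate(candidate, THRESHOLDS)
print("PASS" if not failures else "FAIL: " + "; ".join(failures))
```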

Continuous Integration and Continuous Deployment (CI/CD) for ML Models

Implementing CI/CD pipelines for ML models involves automating the entire lifecycle from development to deployment. Automated test scripts are integrated into the CI/CD pipeline to validate data, evaluate model performance, and ensure that updates do not introduce new issues. This approach enables rapid and reliable deployment of ML models, keeping them current while catching regressions before they reach production.
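In a CI/CD pipeline, one common check compares a candidate model’s metrics with those of the model currently in production and fails the job on a meaningful regression. This Python sketch is a hypothetical example: the metric values are hard-coded where a real pipeline would load them from earlier steps, and the 0.01 tolerance is an assumption.

```python
def find_regressions(candidate, production, tolerance=0.01):
    """List metrics where the candidate is worse than production by more than `tolerance`.

    A metric missing from the candidate counts as a regression.
    """
    regressions = []
    for name, prod_value in production.items():
        cand_value = candidate.get(name)
        if cand_value is None or cand_value < prod_value - tolerance:
            regressions.append(f"{name}: candidate {cand_value} vs production {prod_value}")
    return regressions


def main() -> int:
    production = {"accuracy": 0.90, "recall": 0.80}  # illustrative values
    candidate = {"accuracy": 0.905, "recall": 0.77}
    regressions = find_regressions(candidate, production)
    for r in regressions:
        print("REGRESSION", r)
    return 1 if regressions else 0  # a non-zero exit code fails the CI job
```

Wiring this into CI is then a matter of ending the script with sys.exit(main()), so the job fails whenever main() returns 1.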

Automated testing is a critical component of the QA process for ML/AI products, offering efficiency, consistency, and early issue detection. By leveraging key tools and frameworks and developing robust automated test scripts, organizations can ensure the quality, reliability, and scalability of their ML models. Implementing automation in ML/AI testing not only enhances the testing process but also accelerates the development and deployment of high-quality AI solutions.

Testing Strategies for ML/AI Products

Data Quality Assurance

Ensuring Data Completeness, Consistency, and Correctness

Data quality is the foundation of reliable ML/AI models. Ensuring data completeness involves verifying that all necessary data points are present and that there are no missing values. Consistency checks ensure that the data adheres to expected formats and ranges, while correctness involves validating that the data accurately represents the real-world scenarios it is meant to model. Implementing these checks early in the data pipeline helps prevent errors that could compromise the model’s performance.

Techniques for Detecting and Handling Missing or Corrupted Data

Detecting and handling missing or corrupted data is crucial for maintaining data quality. Techniques such as data imputation, where missing values are filled in using statistical methods or predictive models, help address gaps in the data. Outlier detection methods flag data points that deviate significantly from the norm; because an outlier may be a genuine rare value rather than an error, flagged points should be investigated before they are corrected or removed. Automated data validation tools, like TensorFlow Data Validation (TFDV) and Great Expectations, can be employed to continuously validate incoming data and surface anomalies, so that problems are caught before the data is used for model training.
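The Python sketch below pairs two of these techniques on a small list of sensor readings: median imputation for missing values (None) and Tukey’s interquartile-range fences for flagging outliers. Both are simple, illustrative choices; the readings are made up, and flagged points are meant for review rather than automatic deletion.

```python
import statistics


def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < lo or v > hi]


readings = [21.5, 22.0, None, 21.8, 22.3, 950.0, 21.9, None, 22.1]  # 950.0 looks like a glitch
filled = impute_median(readings)
print(filled)
print("flag for review:", iqr_outliers(filled))
```

The median is used for imputation here because, unlike the mean, it is barely affected by the 950.0 reading.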

Model Performance Evaluation

Metrics for Evaluating Model Accuracy, Precision, Recall, F1 Score, etc.

Evaluating model performance involves assessing various metrics to determine how well the model predicts outcomes. Key metrics include:

- Accuracy: The proportion of correctly predicted instances out of the total instances.

- Precision: The proportion of true positive predictions out of all positive predictions.

- Recall (Sensitivity): The proportion of true positive predictions out of all actual positives.

- F1 Score: The harmonic mean of precision and recall, providing a balanced measure of the model’s performance.

These metrics provide a comprehensive view of the model’s effectiveness and help identify areas for improvement.
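The four metrics can be computed directly from confusion-matrix counts (true positives TP, false positives FP, false negatives FN, true negatives TN): accuracy = (TP + TN) / total, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 * precision * recall / (precision + recall). The Python sketch below does this for binary labels; returning 0.0 when a denominator is zero is one convention among several. In practice, scikit-learn’s sklearn.metrics module provides precision_score, recall_score, and f1_score.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]  # TP=3, FN=1, FP=1, TN=5
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```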

Techniques for Handling Overfitting and Underfitting

Overfitting occurs when a model performs well on training data but poorly on unseen data, while underfitting happens when a model fails to capture the underlying patterns in the data. Techniques to address these issues include:

- Cross-Validation: Splitting the data into multiple training and validation sets to ensure the model generalizes well.

- Regularization: Adding penalties to the model to prevent it from fitting the noise in the training data.

- Pruning: Simplifying complex models to avoid overfitting.

- Early Stopping: Halting the training process once the model’s performance on the validation set starts to degrade.

By implementing these techniques, models can achieve better generalization and robustness.
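Cross-validation, the first technique listed, can be sketched in a few lines of Python: shuffle the indices, split them into k folds, and let each fold serve once as the validation set. The fit and score functions here are deliberately trivial (a majority-class baseline) and are assumptions for illustration; any model with a fit/score pair could be plugged in.

```python
import random
import statistics


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; every index appears in exactly one validation fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def cross_validate(xs, ys, fit, score, k=5):
    """Fit on k-1 folds, score on the held-out fold, and return the per-fold scores."""
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in val], [ys[i] for i in val]))
    return scores


def fit_majority(xs, ys):
    """Toy model: always predict the most common training label."""
    return max(set(ys), key=ys.count)


def accuracy(model, xs, ys):
    return sum(model == y for y in ys) / len(ys)


xs = list(range(50))
ys = [1] * 35 + [0] * 15
scores = cross_validate(xs, ys, fit_majority, accuracy)
print([round(s, 2) for s in scores], "mean:", round(statistics.mean(scores), 3))
```

The spread of the per-fold scores is as informative as their mean: large differences between folds suggest the model’s performance depends heavily on which data it happens to see.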

Bias and Fairness Testing

Identifying and Mitigating Bias in Training Data and Models

Bias in ML models can lead to unfair and discriminatory outcomes. Identifying bias involves analyzing the training data and model predictions to detect any disproportionate errors or outcomes affecting specific groups. Techniques for mitigating bias include:

- Rebalancing Training Data: Ensuring the training data is representative of the diverse populations it serves.

- Fairness Constraints: Incorporating fairness criteria into the model training process to ensure equitable treatment.

- Adversarial Debiasing: Using adversarial training techniques to reduce bias in model predictions.

These strategies help create more inclusive and fair ML/AI systems.
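Rebalancing does not have to mean resampling: a common alternative is to weight examples so that each group contributes equally to the training loss. The Python sketch below computes weight = N / (G * n_g) for each example, where N is the number of examples, G the number of groups, and n_g the size of the example’s group. This is the same formula scikit-learn uses for its "balanced" class-weight option, applied here to demographic groups; the group labels are hypothetical, and many estimators accept such weights through a sample_weight argument to fit.

```python
from collections import Counter


def balanced_weights(groups):
    """Per-example weights so that every group carries the same total weight.

    weight = N / (G * n_g): N examples, G distinct groups, n_g examples in this group.
    """
    counts = Counter(groups)
    n, g = len(groups), len(counts)
    return [n / (g * counts[grp]) for grp in groups]


groups = ["A"] * 8 + ["B"] * 2  # group B is under-represented
weights = balanced_weights(groups)
print(weights[0], weights[-1])  # 0.625 for group A, 2.5 for group B
```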

Ensuring Fairness Across Different Demographics and Subgroups

Ensuring fairness requires validating that the model performs equitably across various demographics and subgroups. This involves:

- Disaggregated Analysis: Evaluating model performance separately for different groups to identify disparities.

- Fairness Metrics: Using metrics like disparate impact, equal opportunity, and demographic parity to measure fairness.

- Bias Audits: Conducting regular audits to assess and address any emerging biases.

By focusing on fairness, ML/AI products can better serve diverse user bases and uphold ethical standards.
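Disaggregated analysis and the fairness metrics above can be computed from predictions, true labels, and a group attribute. In this Python sketch, demographic parity difference is the gap in selection rates (share of positive predictions) between two groups, equal opportunity difference is the gap in true-positive rates, and disparate impact is the ratio of selection rates; the data and group names are made up. A disparate impact below 0.8 is often flagged under the so-called four-fifths rule, though the appropriate threshold depends on context.

```python
def rate(values):
    """Mean of 0/1 values; NaN when the list is empty."""
    return sum(values) / len(values) if values else float("nan")


def fairness_report(y_true, y_pred, groups, privileged, unprivileged):
    """Compare selection rates and true-positive rates between two groups."""
    def members(g):
        return [i for i, grp in enumerate(groups) if grp == g]

    def selection_rate(idx):
        return rate([y_pred[i] for i in idx])

    def true_positive_rate(idx):
        return rate([y_pred[i] for i in idx if y_true[i] == 1])

    priv, unpriv = members(privileged), members(unprivileged)
    sr_priv, sr_unpriv = selection_rate(priv), selection_rate(unpriv)
    return {
        "disparate_impact": sr_unpriv / sr_priv if sr_priv else float("nan"),
        "demographic_parity_diff": sr_unpriv - sr_priv,
        "equal_opportunity_diff": true_positive_rate(unpriv) - true_positive_rate(priv),
    }


groups = ["A"] * 6 + ["B"] * 6
y_true = [1, 1, 1, 0, 0, 0] * 2
y_pred = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 1, 0, 0]
report = fairness_report(y_true, y_pred, groups, privileged="A", unprivileged="B")
print({k: round(v, 3) for k, v in report.items()})
```

On this toy data, group B is selected half as often as group A and its qualified members are recognized only a third of the time, both of which a bias audit would flag.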

Monitoring and Maintenance

Setting Up Monitoring for Model Performance in Production

Continuous monitoring of model performance in production is essential to ensure sustained accuracy and reliability. Monitoring involves tracking key performance metrics and alerting stakeholders to any significant deviations or performance drops. Tools like TensorFlow Model Analysis (TFMA) can compute sliced metrics over batches of logged production data, while custom monitoring scripts or dedicated monitoring services can track metrics continuously and provide near-real-time insight into model health.
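A basic production monitor keeps a sliding window of recent outcomes and raises an alert when accuracy falls too far below the level measured at deployment. The Python sketch assumes that ground-truth labels eventually arrive for served predictions (often with a delay); the baseline, window size, and allowed drop are illustrative parameters, and a real system would route the alert to a paging or dashboard tool.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent labelled predictions and flag large drops."""

    def __init__(self, baseline: float, window: int = 500, max_drop: float = 0.05):
        self.baseline = baseline              # accuracy measured when the model was deployed
        self.max_drop = max_drop              # tolerated drop before alerting
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall out automatically

    def record(self, prediction, label) -> bool:
        """Add one outcome; return True when an alert should fire."""
        self.outcomes.append(prediction == label)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.baseline - self.max_drop


monitor = AccuracyMonitor(baseline=0.90, window=10, max_drop=0.05)
for _ in range(10):
    monitor.record(1, 1)        # ten correct predictions: accuracy 1.0
print(monitor.record(1, 0))    # 9/10 = 0.90, no alert: False
print(monitor.record(1, 0))    # 8/10 = 0.80 < 0.85, alert: True
```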

Strategies for Model Retraining and Updating

Models can degrade over time as data distributions change. Implementing strategies for model retraining and updating helps maintain performance. These strategies include:

- Scheduled Retraining: Regularly retraining models on new data to keep them up-to-date.

- Active Learning: Incorporating new data points identified as challenging or important into the training set.

- Continuous Integration/Continuous Deployment (CI/CD): Automating the retraining, validation, and deployment process so that every retrained model is tested before it replaces the one in production.

By adopting these strategies, organizations can ensure their ML/AI products remain effective and reliable over their lifecycle.
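Active learning is often implemented with uncertainty sampling: from a pool of unlabeled examples, send the ones the model is least sure about to human labelers. The Python sketch below assumes a binary model that outputs a probability (here a hypothetical logistic function of a single score) and ranks candidates by how close that probability is to 0.5.

```python
import math


def predict_proba(x: float) -> float:
    """Hypothetical model: probability of the positive class for a 1-D input."""
    return 1.0 / (1.0 + math.exp(-x))


def most_uncertain(pool, predict_proba, budget=3):
    """Uncertainty sampling: return the `budget` items whose probability is closest to 0.5."""
    return sorted(pool, key=lambda item: abs(predict_proba(item) - 0.5))[:budget]


unlabeled = [-3.0, -0.2, 0.1, 2.5, 0.9, -1.5, 0.05]
to_label = most_uncertain(unlabeled, predict_proba)
print(to_label)  # [0.05, 0.1, -0.2]
```

The selected examples would be labeled, added to the training set, and used in the next retraining run.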

Implementing robust testing strategies for ML/AI products is essential for ensuring data quality, model performance, fairness, and ongoing reliability. By focusing on these critical aspects, organizations can develop high-quality ML/AI solutions that deliver accurate, fair, and consistent results.

Conclusion

Summary of Key Points

Comprehensive QA and testing are essential for the success and reliability of ML/AI products. Rigorous testing ensures data quality, accurate model performance, and fairness, which are all critical for building AI systems that are effective and trustworthy. Throughout this discussion, we have explored various aspects of QA/testing, including the importance of data quality assurance, model performance evaluation, bias and fairness testing, and continuous monitoring and maintenance. Both manual and automated testing approaches play vital roles in addressing these areas:

- Manual Testing: Verifies data preprocessing steps, performs exploratory testing on model predictions, and validates edge cases and uncommon scenarios.

- Automated Testing: Enhances efficiency, scalability, and consistency. It involves tools and frameworks for data validation, model evaluation, and the integration of continuous testing within CI/CD pipelines.

By combining these approaches, organizations can ensure their ML/AI products are robust, reliable, and ethical.

Future Trends in ML/AI Testing

The field of ML/AI testing is rapidly evolving, with new tools and techniques continually emerging. These advancements promise to enhance the effectiveness and efficiency of QA/testing processes:

- Emerging Tools and Techniques: The development of advanced data validation tools, more sophisticated model evaluation frameworks, and automation solutions is transforming how we test ML/AI products. Innovations in explainable AI (XAI) and interpretable machine learning are also becoming crucial for understanding model behavior and ensuring transparency and fairness.

- The Evolving Role of QA: As ML/AI technologies advance, the role of QA in this landscape is becoming increasingly complex and critical. QA professionals must stay updated with the latest developments, adapt to new testing paradigms, and collaborate closely with data scientists and ML engineers. Continuous learning and skill development will be essential to keep pace with the fast-changing ML/AI ecosystem.

The importance of comprehensive QA/testing for ML/AI products cannot be overstated. By leveraging both manual and automated testing approaches, organizations can ensure their AI systems are accurate, reliable, and fair. As the field of ML/AI testing continues to evolve, staying informed about emerging tools and techniques and adapting to new challenges will be key to maintaining high-quality AI solutions.