Big data lets businesses draw insights from very large pools of information. As that data grows, though, keeping it accurate and reliable, and keeping the systems that process it fast, becomes harder. This is where a robust big data testing strategy comes in. In this article, we look at the main challenges of big data testing and the techniques and best practices that address them.

Understanding Big Data Testing: A Fundamental Overview

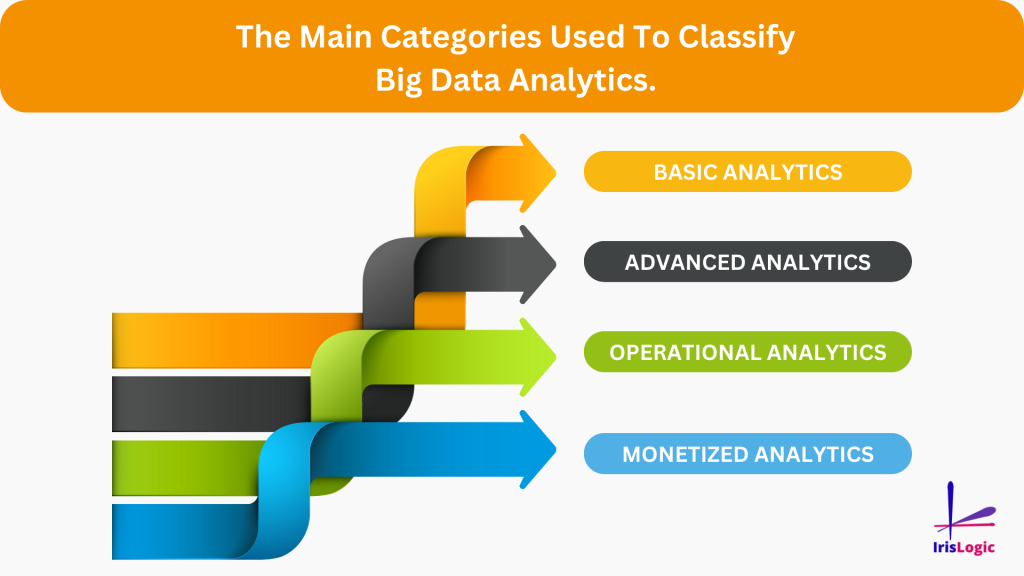

Big data testing is a set of methods and processes for validating the quality, integrity, and usability of large datasets. Unlike traditional testing, which focuses mainly on functional behavior, big data testing also covers the four Vs: volume (how much data there is), velocity (how fast it arrives), variety (how many formats and sources it comes from), and veracity (how trustworthy it is). By testing along each of these dimensions, organizations can reduce risk, improve data quality, and get more value from their data assets.

Key Challenges in Big Data Testing: Overcoming the Hurdles

Big data testing presents several challenges that deserve careful attention:

1. Scalability: As data volumes keep growing, traditional testing frameworks struggle to handle the scale of big data environments.

2. Diversity of Data Sources: Big data ecosystems often draw on many kinds of sources, from structured databases to unstructured sources like social media feeds and sensor data.

3. Real-time Processing: As real-time analytics becomes more common, testing must be able to validate data streams as they arrive, not only after the data has landed.

4. Data Quality Assurance: Maintaining data integrity and quality requires validation techniques that can identify anomalies, inconsistencies, and inaccuracies.

5. Performance Optimization: Big data applications are expected to stay fast as load grows, so performance testing must evaluate response times, throughput, and scalability under varying workloads.
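To make the performance challenge more concrete, here is a minimal sketch of measuring response time and throughput as the workload grows. It assumes a single-process Python job; `aggregate_by_key` is a hypothetical stand-in for the real job under test, and the input sizes are arbitrary. A real big data system would run this kind of measurement against the cluster itself.

```python
import time


def aggregate_by_key(rows):
    """Hypothetical job under test: sum the values for each key."""
    totals = {}
    for key, value in rows:
        totals[key] = totals.get(key, 0) + value
    return totals


def measure(job, rows):
    """Run the job once and return (elapsed_seconds, rows_per_second)."""
    start = time.perf_counter()
    job(rows)
    elapsed = time.perf_counter() - start
    throughput = len(rows) / elapsed if elapsed > 0 else float("inf")
    return elapsed, throughput


def run_load_profile(job, sizes):
    """Measure the job at each input size to see how it scales."""
    results = []
    for n in sizes:
        # Synthetic workload: n rows spread over 100 keys.
        rows = [(i % 100, 1) for i in range(n)]
        elapsed, throughput = measure(job, rows)
        results.append({"rows": n, "seconds": elapsed, "rows_per_sec": throughput})
    return results


if __name__ == "__main__":
    for result in run_load_profile(aggregate_by_key, [10_000, 100_000, 1_000_000]):
        print(result)
```

If rows per second drops sharply as the input size increases, the job is not scaling linearly, and that is worth investigating before the data grows further.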

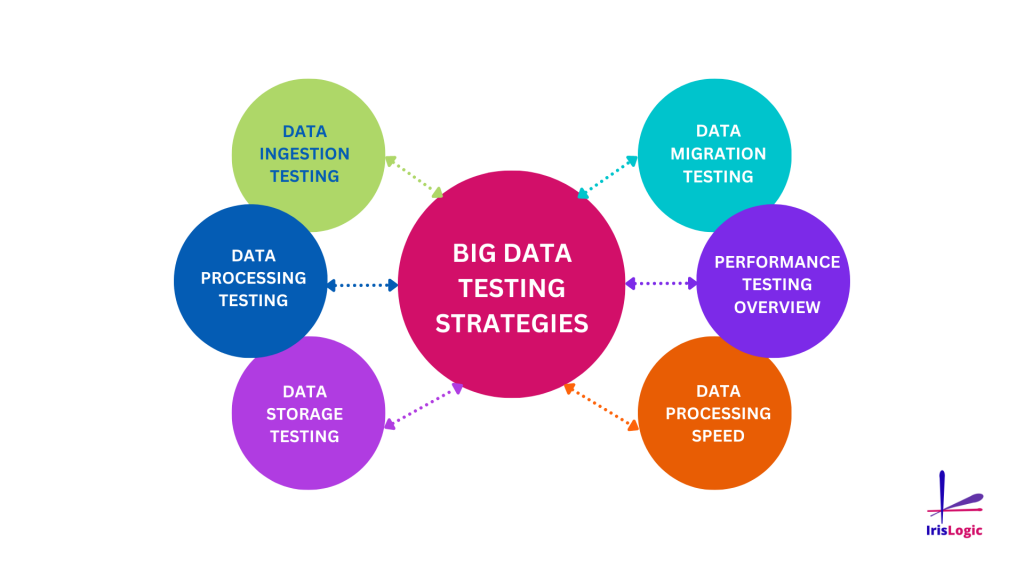

Crafting an Effective Big Data Testing Strategy: Best Practices and Techniques

To overcome these challenges, organizations should adopt a comprehensive testing strategy built on the following best practices:

1. Define Clear Testing Objectives: Align testing objectives with business goals, and spell out the scope, success criteria, and key performance indicators (KPIs) for the testing process.

2. Embrace Automation: Use automation tools and frameworks to streamline testing workflows, reduce manual effort, and shorten time-to-market for big data applications.

3. Implement Data Generation Techniques: Use synthetic data generation to create diverse datasets that represent real-world scenarios, including edge cases, so that tests cover a wide range of use cases.

4. Harness Parallel Processing: Run tests in parallel to shorten test execution and make better use of resources in distributed big data environments.

5. Emphasize Data Validation: Prioritize data validation, including schema validation, data profiling, and anomaly detection, to protect data integrity and quality throughout the testing lifecycle.

6. Conduct Performance Testing: Test the scalability, responsiveness, and throughput of big data applications under different load conditions to confirm that they perform acceptably across scenarios.

7. Foster Collaboration: Encourage development, testing, and operations teams to work together, share insights, and resolve testing problems jointly.
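As an illustration of practices 3 and 5, the sketch below generates a small synthetic dataset with a few deliberately injected defects, then runs a schema check, a basic profile, and a crude outlier check against it. Everything here is an assumption made for the example: the `SCHEMA` fields, the injected defects, and the "more than ten times the median" outlier rule are hypothetical, and a production pipeline would typically use a dedicated validation framework instead of hand-written checks.

```python
import random
import statistics

# Hypothetical schema for illustration: field name -> expected Python type.
SCHEMA = {"user_id": int, "country": str, "amount": float}


def generate_records(n, seed=0):
    """Create n synthetic records (n >= 12) with three known defects."""
    rng = random.Random(seed)
    records = [
        {
            "user_id": i,
            "country": rng.choice(["US", "DE", "IN", "BR"]),
            "amount": round(rng.uniform(5.0, 100.0), 2),
        }
        for i in range(n)
    ]
    # Inject defects so the checks below have something to find.
    records[3]["amount"] = None       # missing value
    records[7]["user_id"] = "seven"   # wrong type
    records[11]["amount"] = 50_000.0  # extreme outlier
    return records


def schema_errors(record):
    """Schema validation: return (field, problem) pairs for one record."""
    errors = []
    for field, expected in SCHEMA.items():
        if field not in record:
            errors.append((field, "missing field"))
        elif not isinstance(record[field], expected):
            errors.append((field, f"expected {expected.__name__}"))
    return errors


def valid_amounts(records):
    """Collect only the amounts that are real floats."""
    return [r["amount"] for r in records if isinstance(r["amount"], float)]


def profile_amounts(records):
    """Data profiling: summarize the valid amounts."""
    amounts = valid_amounts(records)
    return {
        "count": len(amounts),
        "min": min(amounts),
        "max": max(amounts),
        "median": statistics.median(amounts),
    }


def find_outliers(records, factor=10.0):
    """Anomaly detection: flag amounts above factor times the median."""
    threshold = factor * statistics.median(valid_amounts(records))
    return [
        r for r in records
        if isinstance(r["amount"], float) and r["amount"] > threshold
    ]


if __name__ == "__main__":
    data = generate_records(1000)
    print([i for i, r in enumerate(data) if schema_errors(r)])
    print(profile_amounts(data))
    print(find_outliers(data))
```

Because the defects are injected at known positions, the test can assert that each check finds exactly what was planted, which is the main advantage of synthetic data over sampled production data.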

Conclusion: Elevating Big Data Testing to New Heights

Big data offers organizations real opportunities to gain a competitive edge, but only if the data, and the systems that process it, can be trusted. Realizing that potential depends on how well testing validates the integrity, reliability, and performance of the data. With a comprehensive testing strategy that combines automation, data validation, and performance testing, organizations can handle the complexity of big data ecosystems with confidence and turn their data into actionable insights.