Big data is a collection of large datasets that cannot be processed using traditional computing techniques. Testing these datasets involves a variety of tools, techniques, and frameworks. Big data relates to data creation, storage, retrieval, and analysis that is remarkable in terms of volume, variety, and velocity.

At IrisLogic, we believe testing a Big Data application is more about verifying its data processing than testing the individual features of the software product. In Big data testing, performance and functional testing are key. IrisLogic QA engineers verify the successful processing of terabytes of data using commodity clusters and other supporting components. This demands a high level of testing skill because the processing is very fast. Processing may be of three types: batch, real-time, or interactive.

We believe data quality is an important factor in Hadoop and Spark testing. Before testing the application, it is necessary to check the quality of the data, and this check should be considered part of database testing. It involves checking characteristics such as conformity, accuracy, duplication, consistency, validity, and data completeness.
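As a rough illustration of such checks, the sketch below counts completeness, validity, and duplication problems in a small batch of records. The field names (`id`, `email`, `amount`) and the rules applied to them are hypothetical assumptions made for this example, not part of any particular framework.

```python
from collections import Counter

# Hypothetical schema: these field names and rules are illustrative assumptions.
REQUIRED_FIELDS = ("id", "email", "amount")


def quality_report(records):
    """Count completeness, validity, and duplication problems in a list of dicts."""
    report = {"incomplete": 0, "invalid": 0, "duplicates": 0}
    id_counts = Counter()
    for rec in records:
        # Completeness: every required field must be present and non-empty.
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["incomplete"] += 1
            continue
        # Validity: the amount must parse as a non-negative number.
        try:
            if float(rec["amount"]) < 0:
                report["invalid"] += 1
        except (TypeError, ValueError):
            report["invalid"] += 1
        id_counts[rec["id"]] += 1
    # Duplication: each extra occurrence of an id counts as one duplicate.
    report["duplicates"] = sum(n - 1 for n in id_counts.values() if n > 1)
    return report


sample = [
    {"id": 1, "email": "a@example.com", "amount": "10.5"},
    {"id": 1, "email": "a@example.com", "amount": "10.5"},
    {"id": 2, "email": "", "amount": "3"},
    {"id": 3, "email": "c@example.com", "amount": "-4"},
    {"id": 4, "email": "d@example.com", "amount": "abc"},
]
print(quality_report(sample))
```

On a real cluster the same rules would more likely be expressed as Spark or Hive queries over the full dataset; the plain-Python version only shows the shape of the checks.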

- Check that the proper data is pulled into the system

- Compare source data with the data landed on Hadoop

- Check that the right data is extracted and loaded into the correct HDFS location

- Verify that data aggregation and segregation rules are implemented on the data

- Verify that key-value pairs are generated

- Validate the data after the MapReduce, Spark, or Tez process

...and much more
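One common way to compare source data with landed data is to reconcile row counts and a content fingerprint. The sketch below is a minimal example that assumes both sides can be read as iterables of row tuples; how the rows are fetched from the source system or from HDFS is left out, and the function names are hypothetical.

```python
import hashlib


def fingerprint(rows):
    """Return (row_count, order-independent digest) for an iterable of row tuples."""
    count = 0
    combined = 0
    for row in rows:
        # Join fields with a separator that is unlikely to appear in the data.
        line = "\x1f".join(str(value) for value in row).encode("utf-8")
        digest = hashlib.sha256(line).digest()
        # Sum per-row hashes modulo 2**256 so that row order does not matter
        # while duplicated rows still change the result.
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, combined


def reconcile(source_rows, landed_rows):
    """Compare a source extract with the data landed on the cluster."""
    src = fingerprint(source_rows)
    dst = fingerprint(landed_rows)
    return {"count_match": src[0] == dst[0], "content_match": src == dst}


source = [(1, "alice", 10.5), (2, "bob", 3.0)]
landed = [(2, "bob", 3.0), (1, "alice", 10.5)]  # same rows, different order
print(reconcile(source, landed))
```

The order-independent digest matters because distributed loads rarely preserve row order, so a plain file checksum would report false mismatches.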

All steps of data collection, aggregation, segregation, processing, and UI testing can be automated. IrisLogic has been working on this for years, and we have teams of engineers and a ready-made framework written in Python and Java/Selenium to help. We can provide end-to-end Big Data QA automation testing for:

- Data Verification and Validation (structured or unstructured)

- Data Processing

- Performance Testing

- Integration Testing

- Functional Testing

- UI Testing of Dashboard and Reports

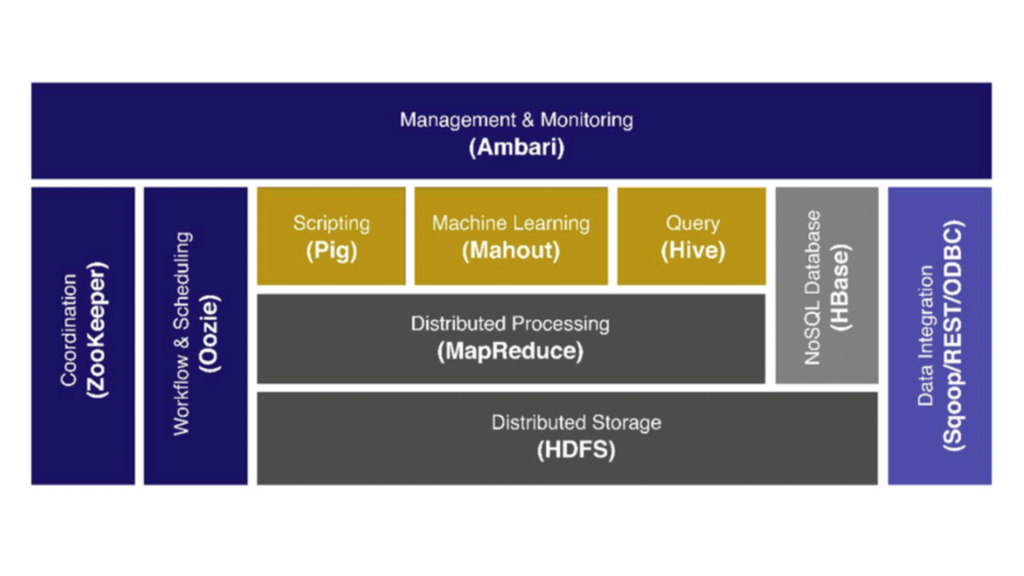

We are well versed in all areas of MapReduce (Hadoop, Hive, Pig, Oozie, MapReduce, Kafka, Flume), Storage (S3, HDFS), Servers (Elastic, Heroku, Google App, EC2), Processing (R, Yahoo! Pipes, Mechanical Turk), and NoSQL (ZooKeeper, HBase, Hive, MongoDB).

As data engineering and data analytics advance to the next level, Big data testing becomes inevitable. Big data processing can be batch, real-time, or interactive. Testing a Big Data application has three stages: data staging validation, “MapReduce” validation, and output validation. Architecture testing is an important phase of Big data testing, because a poorly designed system may lead to unexpected errors and degraded performance. Performance testing for Big data includes verifying data throughput, data processing, and sub-component performance. Big data testing differs from traditional data testing in its data, infrastructure, and validation tools. Its challenges include virtualization, test automation, dealing with large datasets, and performance testing of the applications themselves.
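To make the “MapReduce” validation stage more concrete, the sketch below runs a toy word-count job as separate map and reduce phases and then checks its key-value output against an independently computed expectation. The job itself and all function names are assumptions chosen for illustration; a real test would read the job's output from the cluster and compare it with expected results for a small, known input.

```python
from collections import Counter, defaultdict


def map_phase(lines):
    """Emit (word, 1) key-value pairs, as a word-count mapper would."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def reduce_phase(pairs):
    """Sum the values for each key, as a word-count reducer would."""
    totals = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


def validate_output(lines, job_output):
    """Check the job output against an independently computed reference."""
    expected = Counter(word for line in lines for word in line.lower().split())
    return job_output == dict(expected)


lines = ["big data big", "data testing"]
output = reduce_phase(map_phase(lines))
print(output, validate_output(lines, output))
```

Keeping the reference computation separate from the job logic is the point of the design: if both shared the same code, a bug in it would go unnoticed.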

IrisLogic is the perfect partner to guide you through this journey of manual and automated testing of Big Data applications and infrastructure, using a state-of-the-art framework and expertise.